When it comes to graphics cards, more is nearly always better. More shaders, more RAM, more bandwidth – all of these mean better performance and higher detail settings in games. But what if you can only make the chips so big? How can you add more speed, if the processors are at their limits, in terms of size and transistor count?

Simple: add another chip! (Not so simple, as you will quickly learn.) Here's a brief stroll through the story of multi-GPU graphics cards, the true giants of performance, power, and price.

The Dawn of the Dual

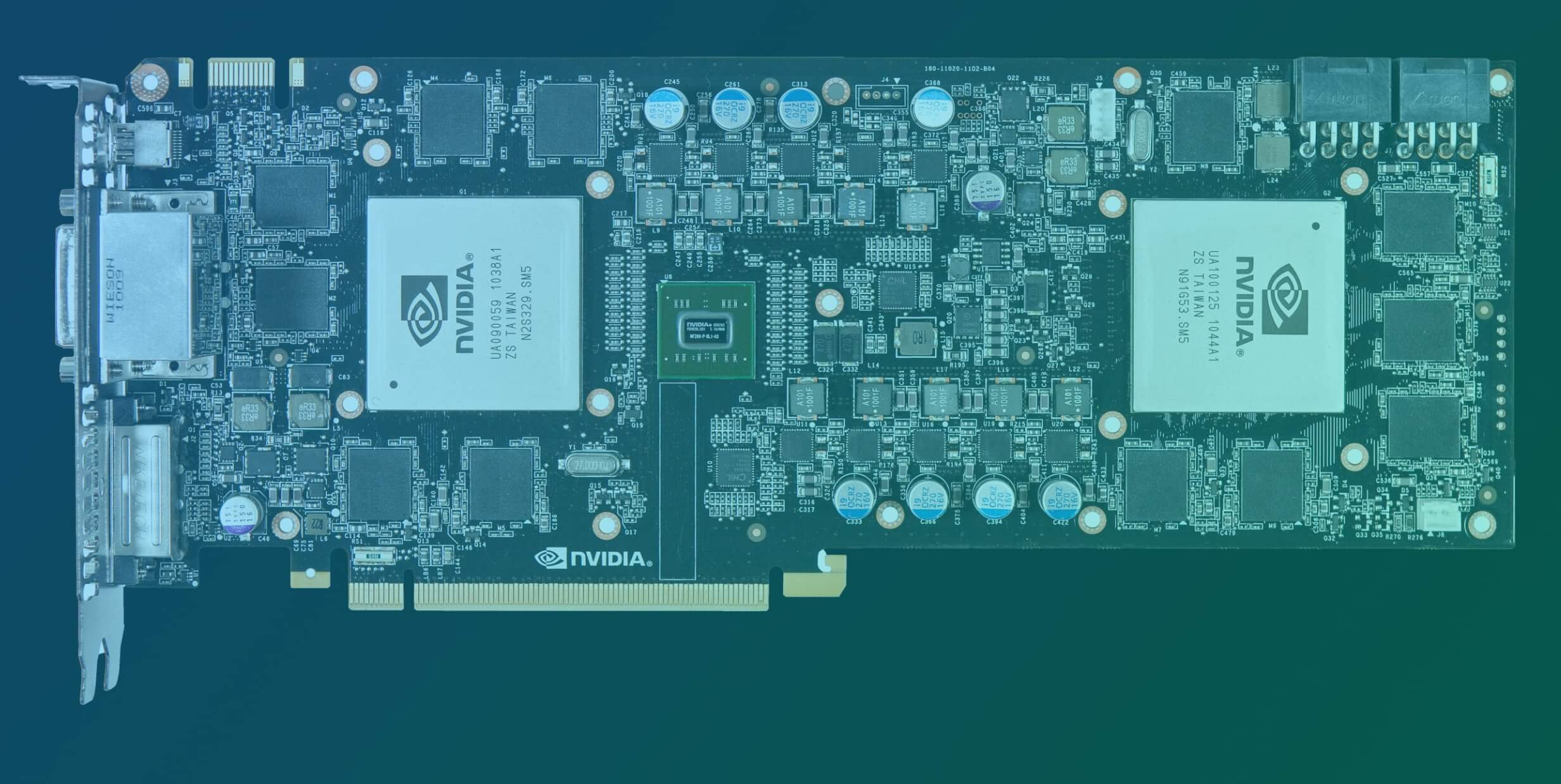

Before we head back in time to the beginning of our story, let's take stock of how the vast majority of graphics cards are equipped these days. On the circuit board, after you've stripped off the cooling system, you'll find a very large chunk of silicon – the graphics processing unit (GPU).

All of the calculations and data handling required to accelerate 2D, 3D, and video processing are done by the one chip. The only other ones you'll find are DRAM modules, exclusively for the GPU to use, and some voltage controllers.

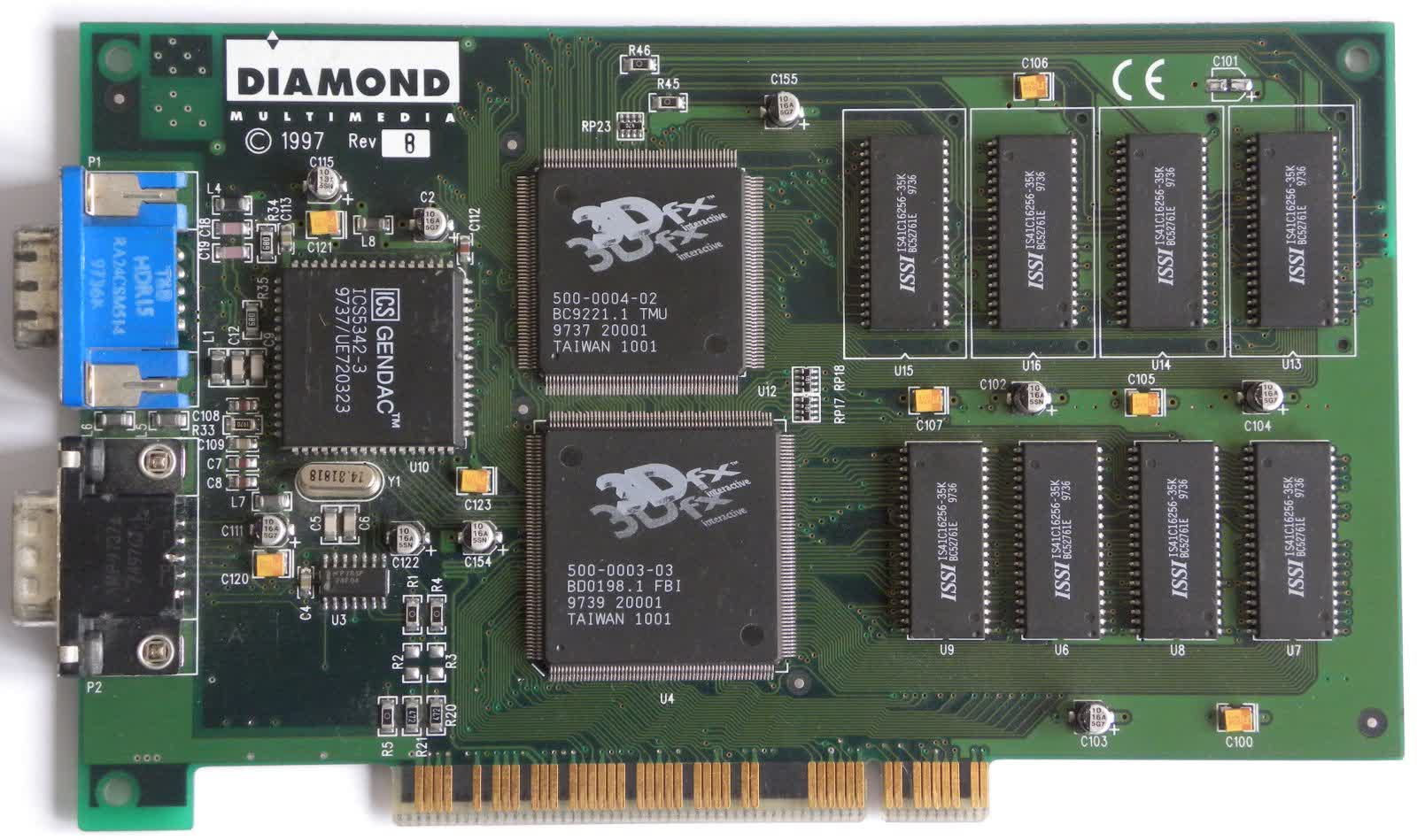

But it wasn't always like this. Some of the very first 3D graphics cards sported multiple chips, although these weren't really GPUs. For example, 3dfx's Voodoo 1, released in 1996, had two processors on the circuit board, but one just handled the textures, and the other blended pixels together.

Like so many early 3D accelerators, a separate card altogether was needed for 2D workloads. Companies such as ATI, Nvidia, and S3 focused the development of their chips to incorporate all of the individual processors into a single structure.

The more pieces of silicon a card sported, the more expensive it was to manufacture, which is why consumer-grade models quickly switched to single chips only. However, professional graphics cards of the same era as the Voodoo 1 often took a multi-chip approach.

Once the powerhouse of the professional rendering industry, 3DLabs built their reputation on monstrous devices, such as the Dynamic Pictures Oxygen 402, as shown below.

This card has two large chips, bottom left, for handling the 2D processing and video output, and then four accelerators (hidden underneath heatsinks) for all of the 3D workload. In those days, vertex processing was done on the CPU, which then passed the rest of the rendering on to the graphics card.

The Oxygen chips would turn the vertices into triangles, rasterize the frame, and then texture and color the pixels (read our rendering 101 guide for an overview of the process). But why four of them? Why didn't 3DLabs just make one massive, super powerful chip?

What Multi-GPU Offers

To understand why 3DLabs chose to go with so many processors, let's take a broad overview of the process of creating and displaying a 3D image. In theory, all of the calculations required can be done using a CPU, but CPUs are designed to cope with random, branching tasks issued in a linear manner.

3D graphics is a lot more straightforward, but many of the stages require a huge amount of parallel work to be done – something that's not a CPU's forte. And if it's tied up handling the rendering of a frame, it can't really be used for anything else.

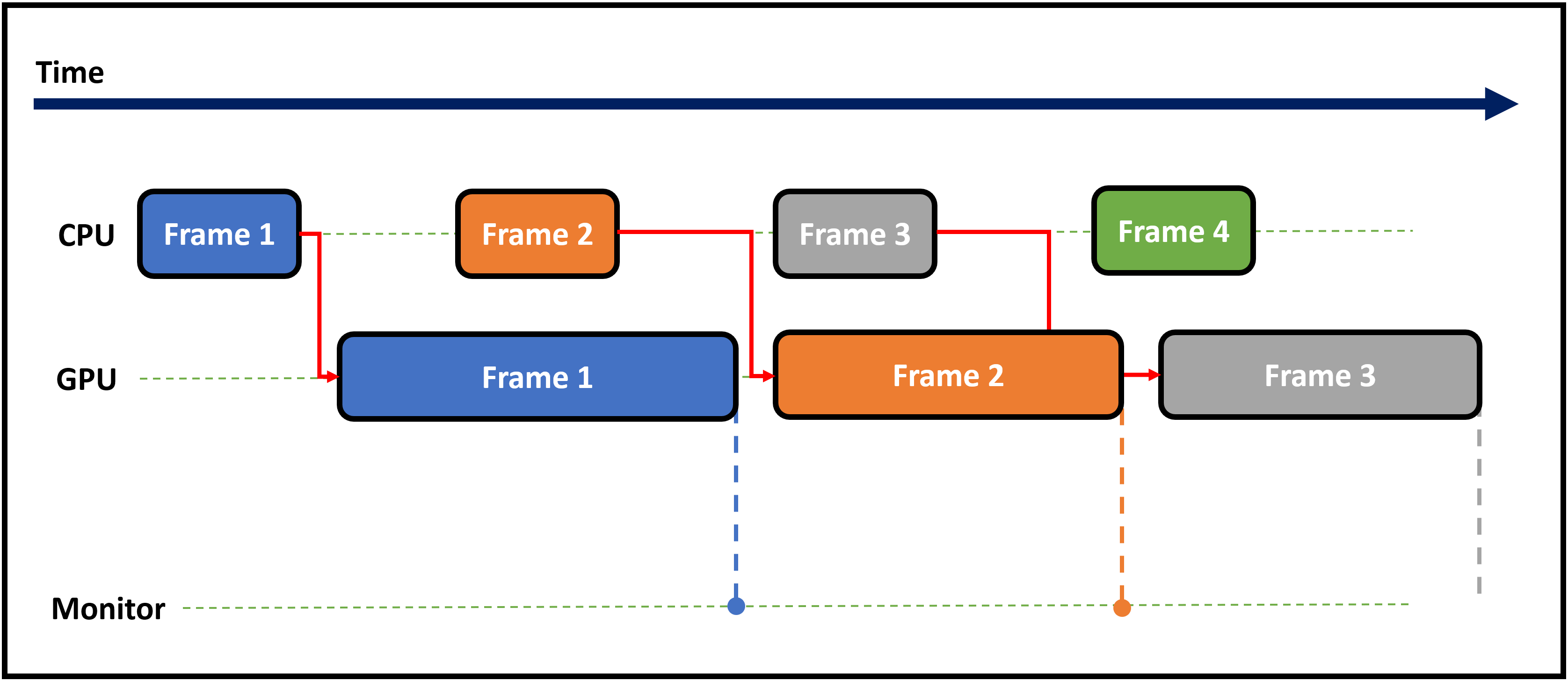

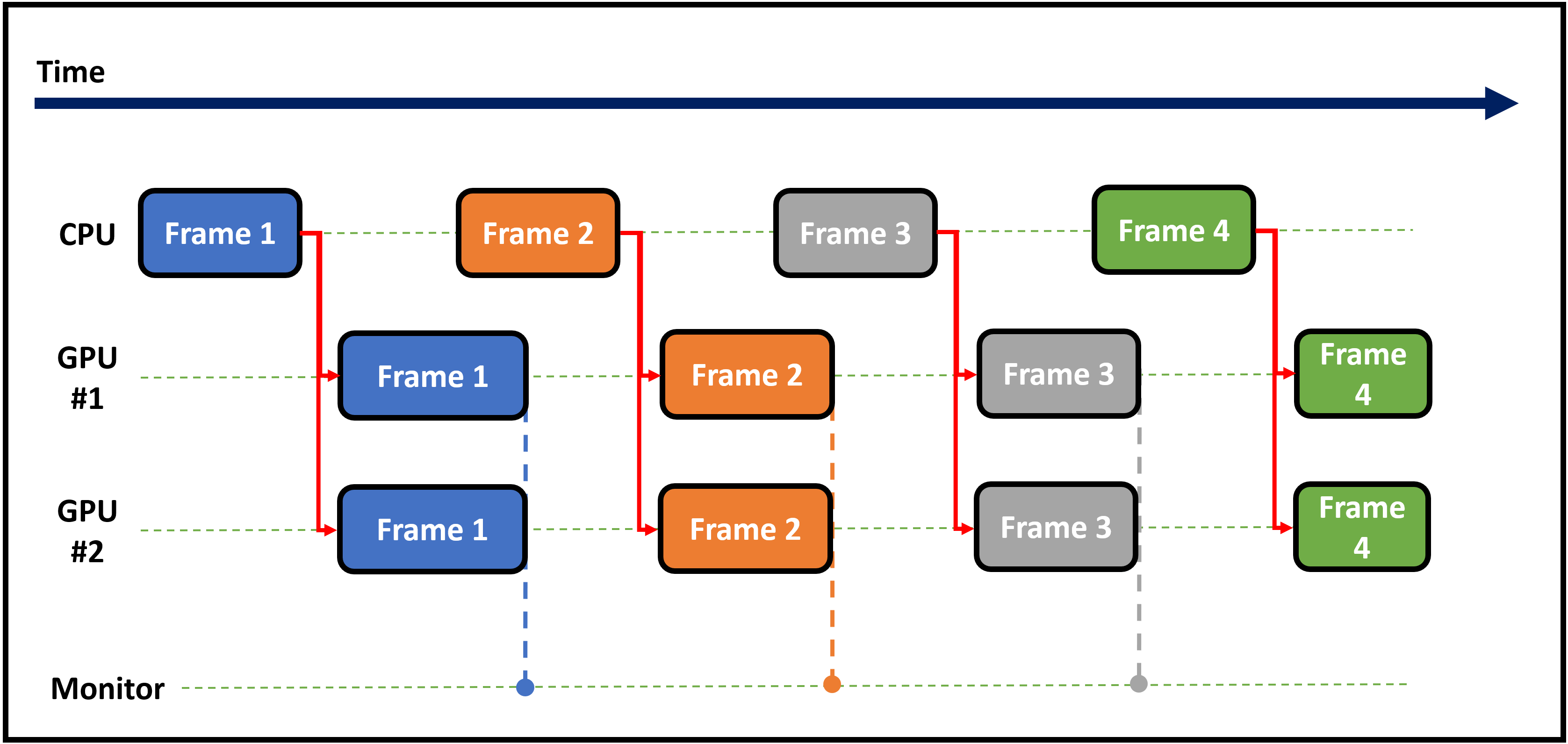

This is why graphics processors were created – the preparatory work for a 3D frame is still done using a CPU, but the math for the graphics itself is done on a highly specialized chip. The image below represents the timeline for a sequence of four frames, where the CPU generates the tasks required at set intervals.

Those instructions, and information about what data is required, are then issued to the graphics processor to grind through. If this takes longer than the time required for the next frame to be set up, then there will be a delay in displaying the next frame until the first one is finished.

Another way of looking at it is that the GPU's frame rate is lower than the CPU's rate. A more powerful graphics chip would obviously decrease the time required to render the frame, but if there are engineering or manufacturing limits to how good you can make them, what are your other options?

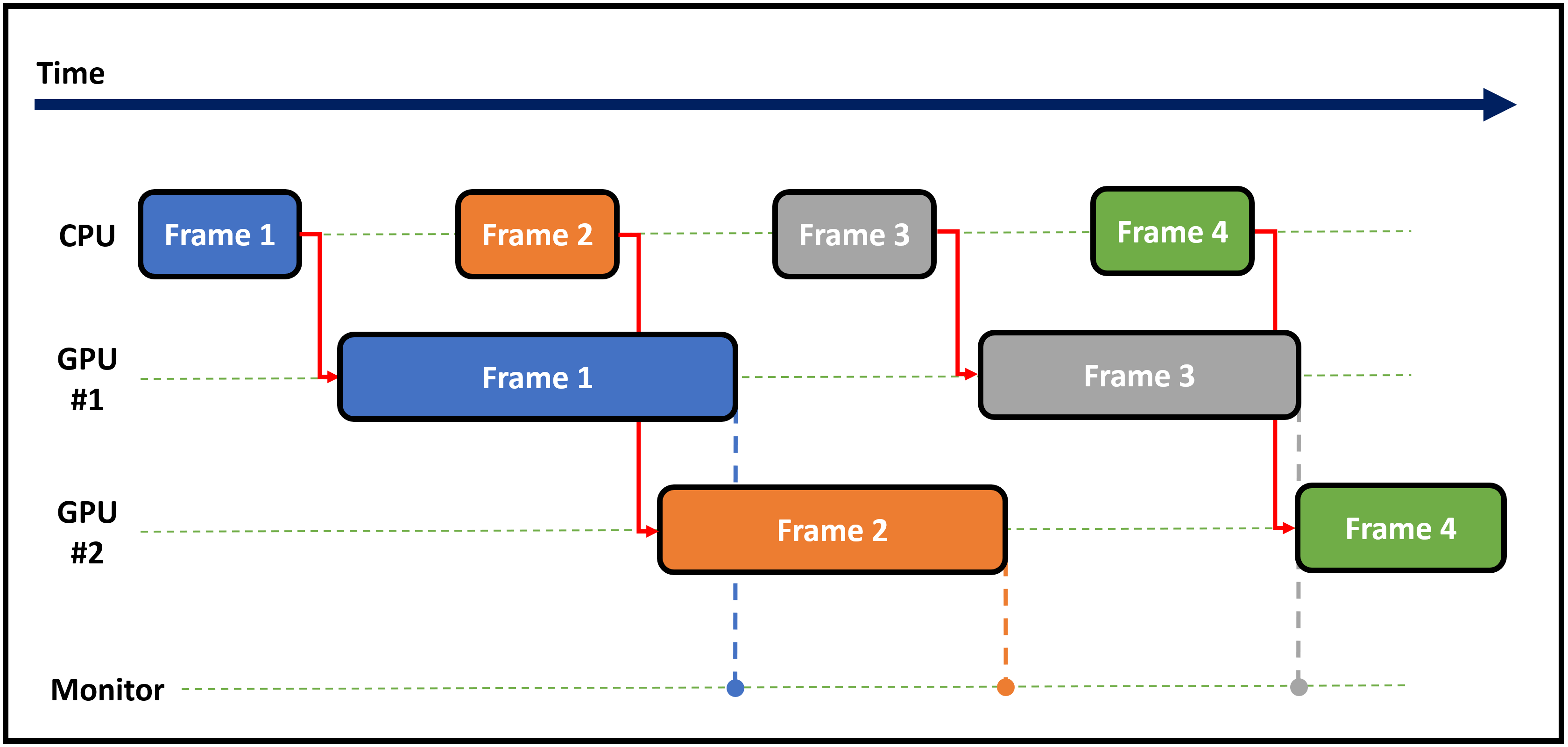

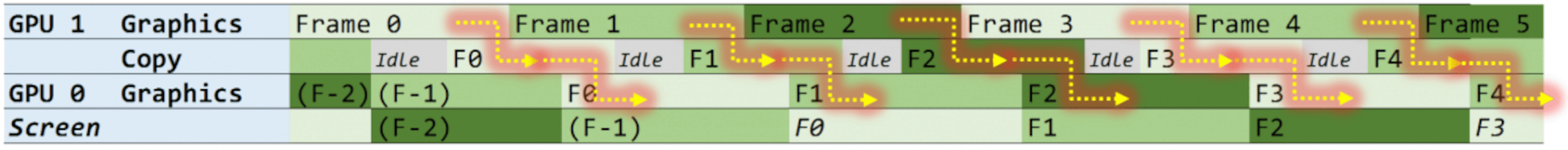

Well, there are two: (1) use another GPU to start on the next frame, while the other is still processing the first one, or (2) split the workload of a frame across multiple chips. The first method is generally known as alternate frame rendering or AFR, for short.

The above diagram shows roughly how this works in practice. You can see that the time gap between frames appearing on the monitor is smaller, compared to using just one GPU. The overall frame rate is better, although it's still slower than the CPU's.

And there's still a notable delay between the first frame being issued and it appearing on the screen – all caused by the fact that one GPU is still having to process the entire frame.

The other approach involves splitting the rendering tasks across two or more GPUs, sharing out sections of the frame in blocks (split frame rendering) or alternating lines of pixels (as used with the Dynamic Pictures Oxygen card).
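To make the two ways of carving up a frame concrete, here's a minimal Python sketch. The function names are our own invention for illustration, and real hardware balances the work in more sophisticated ways, so treat it as a toy model rather than a description of how any particular card did it.

```python
def split_frame_blocks(height: int, gpus: int) -> list[range]:
    """Split frame rendering: each GPU gets one contiguous band of rows."""
    band = -(-height // gpus)  # ceiling division, so no row is left over
    return [range(g * band, min((g + 1) * band, height)) for g in range(gpus)]


def interleave_lines(height: int, gpus: int) -> list[range]:
    """Line interleaving: GPU g gets rows g, g + gpus, g + 2 * gpus, ..."""
    return [range(g, height, gpus) for g in range(gpus)]


if __name__ == "__main__":
    # A tiny 8-row "frame" shared between two GPUs.
    for name, scheme in (("blocks", split_frame_blocks),
                         ("interleaved", interleave_lines)):
        print(name, [list(rows) for rows in scheme(8, 2)])
```

With bands, one GPU can end up with the busy half of the scene while the other idles on empty sky; interleaving lines tends to spread that load more evenly, since neighboring rows usually contain similar amounts of work.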

With this method, each frame is processed far quicker, reducing the delay between the CPU issuing the work and it being displayed. The overall frame rate might not be any better than when using AFR (possibly even a little worse), but it is more consistent.
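The trade-off between the two methods can be sketched with a toy timeline in Python. Everything here is an assumption made for illustration: the CPU issues a frame every 10 ms, one GPU needs 30 ms for a whole frame, and splitting a frame across chips costs a fixed 2 ms of overhead. None of these figures come from real hardware.

```python
def single_gpu(issue_times, render_ms):
    """One GPU renders whole frames back to back."""
    done, free_at = [], 0.0
    for issued in issue_times:
        # Start when the frame is issued or when the GPU is free, whichever is later.
        free_at = max(issued, free_at) + render_ms
        done.append(free_at)
    return done


def alternate_frame(issue_times, render_ms, gpus):
    """AFR: frame i goes to GPU i % gpus; each GPU still renders a whole frame."""
    done, free_at = [], [0.0] * gpus
    for i, issued in enumerate(issue_times):
        g = i % gpus
        free_at[g] = max(issued, free_at[g]) + render_ms
        done.append(free_at[g])
    return done


def split_frame(issue_times, render_ms, gpus, overhead_ms):
    """Split work: all GPUs share every frame, plus an assumed fixed overhead."""
    return single_gpu(issue_times, render_ms / gpus + overhead_ms)


def gaps(done):
    """Time between consecutive frames reaching the screen."""
    return [b - a for a, b in zip(done, done[1:])]


if __name__ == "__main__":
    issued = [i * 10.0 for i in range(4)]  # CPU issues a frame every 10 ms
    for name, done in (("single", single_gpu(issued, 30.0)),
                       ("AFR x2", alternate_frame(issued, 30.0, 2)),
                       ("split x2", split_frame(issued, 30.0, 2, 2.0))):
        print(f"{name:9} done at {done}  gaps {gaps(done)}")
```

With these made-up numbers, AFR still takes 30 ms to deliver the first frame and the gaps between frames alternate between 10 and 20 ms, whereas the split approach shows its first frame after 17 ms and keeps a steady 17 ms gap: slightly slower on average, but more consistent.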

Both of these techniques can be carried out by using multiple graphics cards rather than multiple processors on one card – technologies such as AMD's CrossFire and Nvidia's SLI are still around, but have fallen heavily out of favor in the general consumer market.

However, for this article, we're only interested in multi-GPU products – graphics cards packing two or more processors, so let's dig into them.

Enter The Dragon(s)

3DLabs' hulking multi-GPU cards were incredibly powerful, but also painfully expensive – the Oxygen 402 retailed at $3,695, nearly $6k in today's money! But there was one company that offered a product sporting two graphics chips at an affordable price.

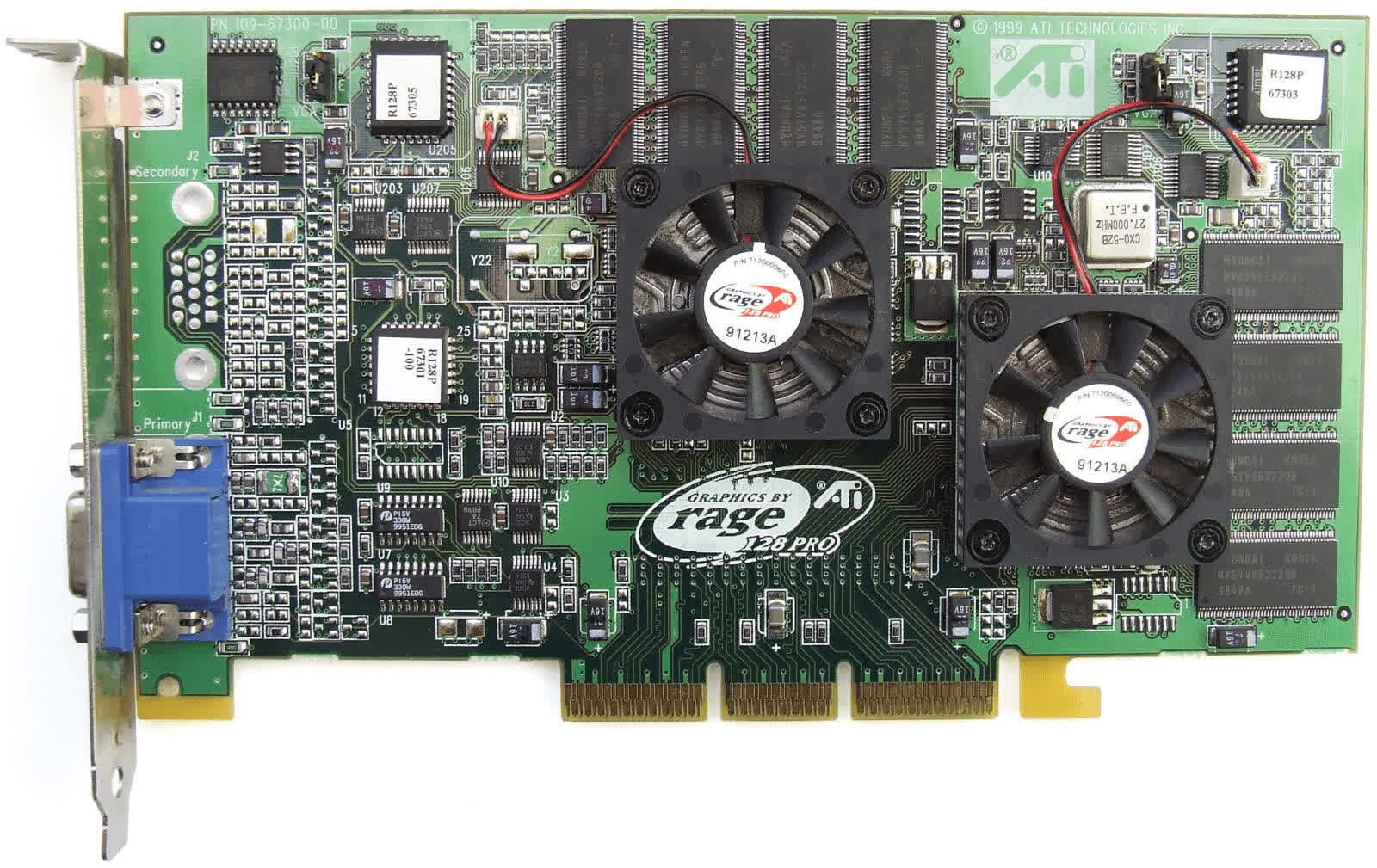

In 1999, two years on from the Oxygen 402, ATI Technologies released the Rage Fury MAXX. This Canadian fabless company had been in the graphics business for over 10 years by this point, and their Rage series of chips were very popular.

The MAXX sported two of their twin pipeline Rage 128 Pro chips, with each getting 32 MB of SDRAM to work with. It used the AFR method to push frames out, but it was noticeably outperformed by Nvidia's GeForce 256 DDR and cost $299, about $20 more than its rival.

Incidentally, it was the GeForce 256 that was the first graphics card to be promoted as having a GPU.

The term itself had been in circulation before this card appeared, but if we assume a GPU to be a chip that handles all of the calculations in the rendering sequence (vertex transforms and lighting, rasterization, texturing and pixel blending), then Nvidia was certainly the first to make one.

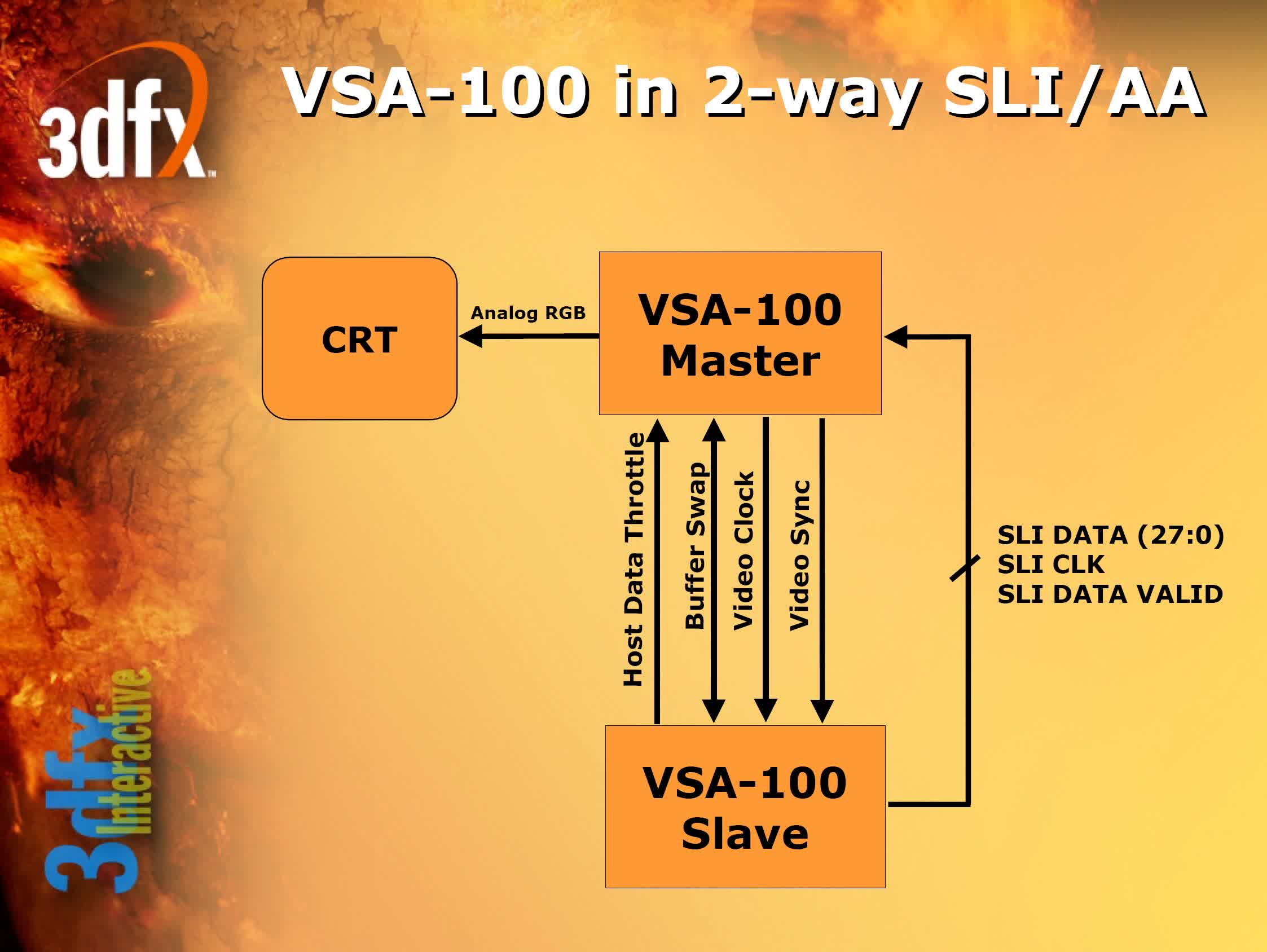

Another company also interested in exploring multi-GPU products was 3dfx. They had already pioneered a method of linking two graphics cards together (known as scan line interleaving, SLI) with their earlier Voodoo 2 models.

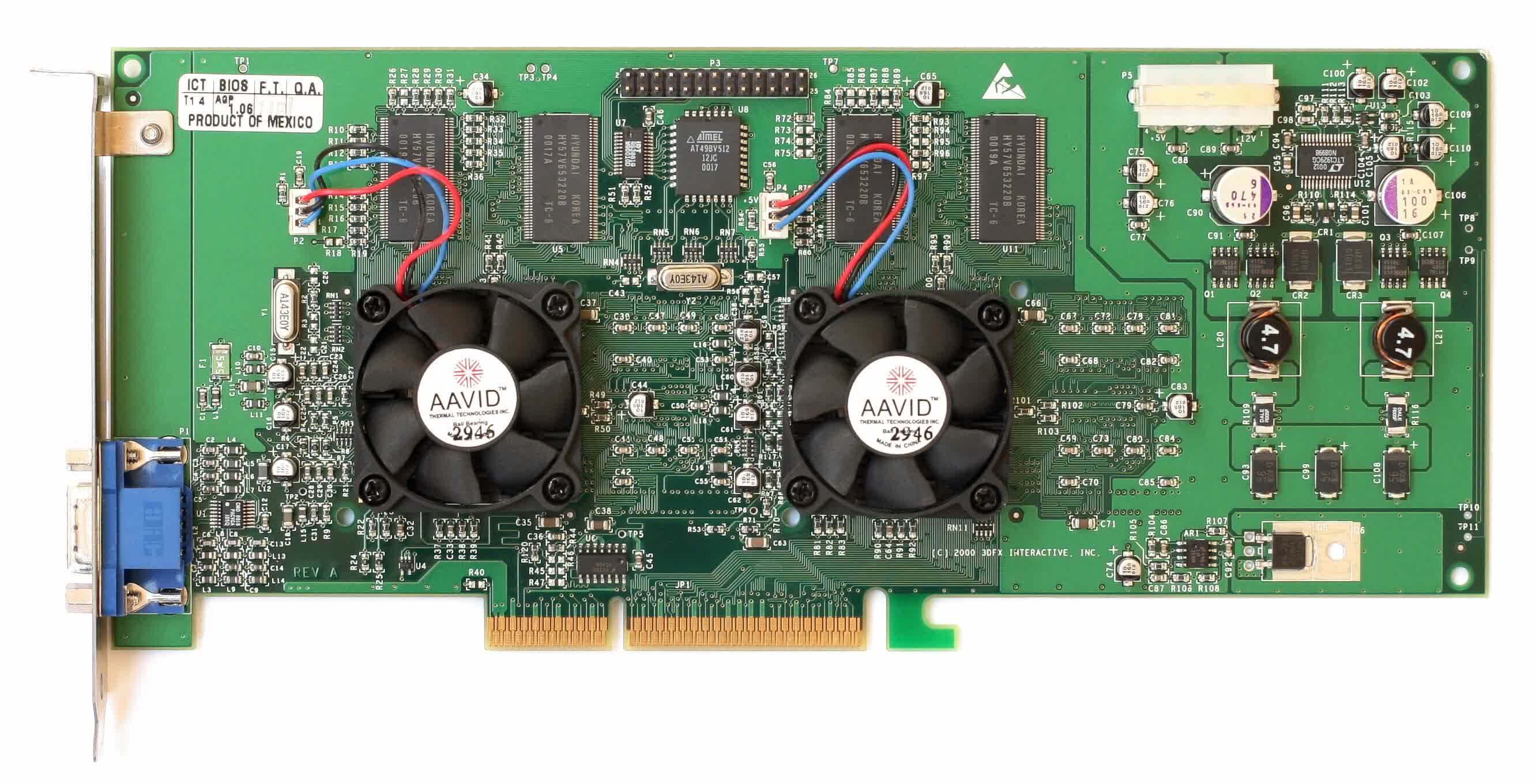

Roughly a year after the appearance of ATI's MAXX, they brought the Voodoo 5 5500 to the masses, retailing at just under $300.

The VSA-100 chips on the board were twin pipelined like the Rage 128 Pros, but supported more features and had a wider memory bus. Unfortunately the product was late to market, and not entirely problem free; worse still, it was only a little better than the GeForce 256 DDR and quite a lot slower than its successor, the GeForce 2 GTS (which was also cheaper).

While some of the performance deficit could be blamed on the graphics processors themselves, the interface used by the card didn't help. The Accelerated Graphics Port (AGP) was a specialized version of the old PCI bus, designed exclusively for GPUs.

While it greatly improved how data could be transferred to and from the card, by having a direct connection to the system memory, the interface wasn't designed to have multiple devices using it.

So multi-GPU cards either needed an extra chip to act as the device on the AGP and manage the data flows to the GPUs or, as in the case of the Voodoo 5, one of the GPUs had to handle all of those tasks. Generally, this created problems around bus stability, and the only way to get around the issues was to run the interface at a lower rate.

ATI continued to experiment with dual GPU products, although few were ever publicly released, and 3dfx was eventually bought by Nvidia before they had the chance to improve their VSA-100 chips. Their SLI technology was incorporated into Nvidia's graphics cards, although only in name – Nvidia's version was quite different underneath the hood.

Even XGI Technology, a spin-off from the venerable chipset firm SiS, tried to join the act with their Volari Duo V8 Ultra cards. Sadly, despite the early promise of the hardware, the performance didn't match up to the product's ultra cool name!

The Volari Duo did nothing to save XGI's rapid demise, and ATI had far more success with their single GPU products, such as the Radeon 9800 XT and 9600 Pro.

So you'd think that everyone would just give up – after all, who would want to try to sell an expensive, underwhelming graphics card?

Nvidia, that's who.

The Race for Excess

After briefly dabbling with dual GPU cards in 2004 and 2005, the California-based graphics giant released more serious efforts in 2006: the GeForce 7900 and 7950 GX2.

Their approach was unconventional, to say the least. Rather than taking two GPUs and fitting them to a single circuit board, Nvidia essentially took two GeForce 7900 GT cards and bolted them together.

By this time, AGP had been replaced by PCI Express, and despite this interface still being point-to-point, the use of a PCIe switch allowed multiple devices to be more readily supported on the same slot. The upshot of this is that multi-GPU cards could now run with far more stability than they ever could on the older AGP.

Despite launching at $599, the likes of the 7950 GX2 could often be found cheaper than that, and more importantly, it was less expensive than buying two separate 7900 GTs. It also proved to be the fastest consumer graphics card on the market at the time.

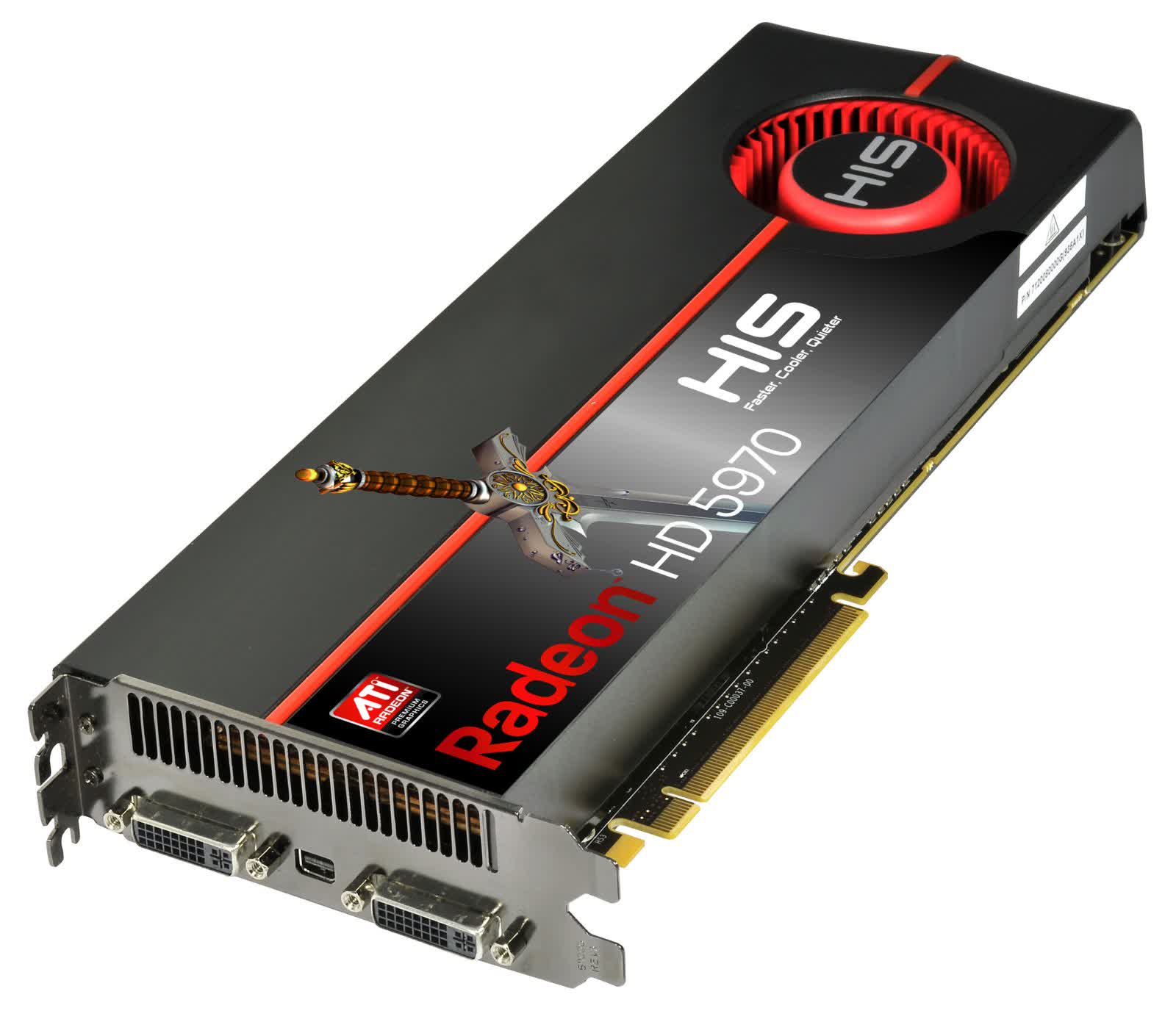

For the next 6 years, ATI and Nvidia fought for the GPU performance crown, releasing numerous dual processor models, of varying prices and performance. Some were very expensive, such as the Radeon HD 5970 at $699, but they always had the speed chops to back up the price tag.

Some were quite reasonably priced: Nvidia's GeForce GTX 295, for example, launched at $500. Not overly affordable in 2009, but given that the then year-old GTX 260 had an MSRP of $450, the fact that the GTX 295 outperformed two of them made it a relative bargain.

Technology races typically lead to excess, though, and while enthusiast-level graphics cards have always been draining on the wallet, Nvidia took it to a whole new level in 2012.

Up to that point, dual GPU cards typically comprised GPUs from the lower section of the top-end spectrum, to keep power and heat levels in check. They were also priced in such a way that, while clearly setting them apart from the rest of the product range, the cost-to-performance ratio could be justified.

However, at $999, the GeForce GTX 690 was not only twice the price of their next-best offering, the GeForce GTX 680, but it performed exactly the same as two 680 cards connected together (both setups using a modified SLI method) – and worse than two AMD Radeon HD 7970s (which used AFR).

Its only real advantage was power consumption, being around 300 W at most – in contrast, just one HD 7970 used up to 250 W. But the escalating dollar tags and energy requirements hadn't quite reached their zenith.

Exit The Dragon(s)

Nvidia focused heavily on improving their single GPU products after the GTX 690, but in the middle of 2014, they gave us what would be their last multi-GPU offering – for now, at least.

The GeForce GTX Titan Z, despite its colossal 'single card' performance, was an exercise in hubris and greed. Coming in at just shy of three thousand dollars, nothing about it made any sense whatsoever.

Sporting the same GPUs as found on the GeForce GTX 780 Ti, but clocked a little slower to keep the power consumption down, it performed no better than two of those cards running in SLI – and their launch price was $699.

In other words, Nvidia was charging you more than double the price for the Titan Z! Once again, its only saving grace was that the maximum power draw was less than 400 W. Of course, this isn't a bonus at all when the rest of the product is so ridiculous, but it was better than the competition.

Power demands had been an issue for AMD's dual GPU cards for a number of years, but they reached insanity levels in 2015 with the Radeon R9 390 X2.

Priced at an entirely unreasonable $1,399, it had a thermal design power (TDP) rating of 580 W. To put that into some kind of perspective, our 2015 test system for graphics cards was drawing less than 350 W for the entire setup – including the graphics card.

By this stage, Nvidia had bailed out of the multi-GPU race altogether, and even AMD only tried with a few more models, most of which were aimed at the professional market.

But surely there must still be a market for them? After all, Nvidia's $1,000+ GeForce RTX 2080 Ti sold very well for such an expensive card, so it can't just be an issue of price. It's a similar situation with power: the Radeon Pro Duo from 2016 had a TDP of 350 W, 40% less than the R9 390 X2.

And yet, the only one you can now buy can be found in Apple's Mac Pro – an AMD Radeon Pro Vega II Duo, for an eye-watering $2,400. The reason behind the apparent death of the multi-GPU graphics card lies not in the products themselves (although that does play a role), but in how they're used.

It's Not You, It's Me

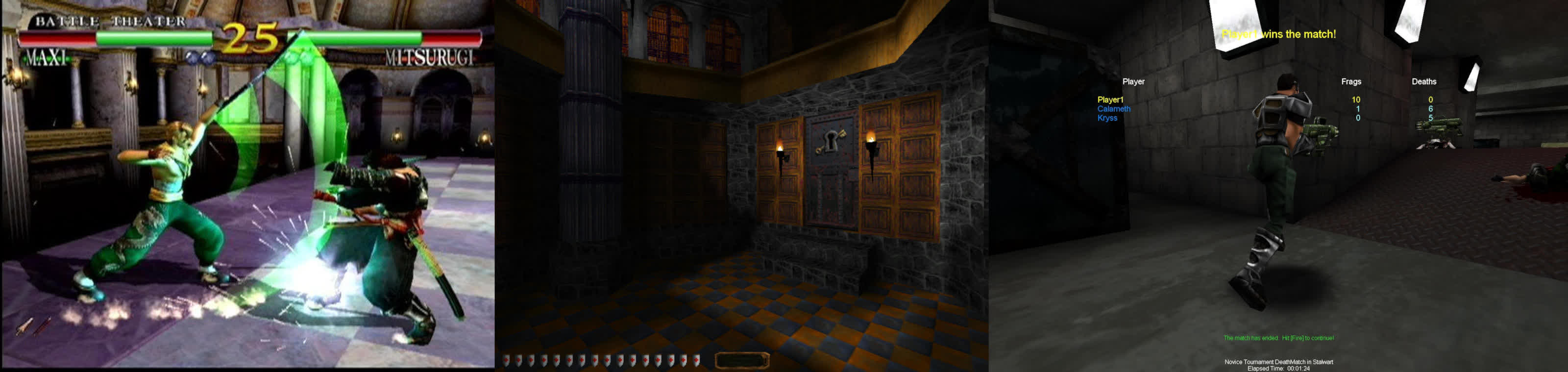

When the Rage Fury MAXX appeared in 1999, true 3D games (using polygons and textures) were still relatively new. id Software's Quake was only 3 years old, for example.

Consoles such as the Sega Dreamcast had titles with some seriously impressive graphics, but PC games were generally more muted. All of them used similar rendering techniques to produce the visuals: simply lit polygons, with one or two textures applied to them. Graphics processors then were limited in their capabilities, so the graphics had to follow suit.

But as the hardware began to advance, developers started to employ more complex techniques. A single 3D frame might require multiple rendering passes to produce the final image, or the contents of one pass might be used in other frames.

The result of all these graphical enhancements was that the workload for a GPU became increasingly variable.

The user of a multi-GPU card (or multiple cards, for that matter) will almost certainly experience a higher overall frame rate, compared to using just one GPU, but the greater variation in the time between frames manifests in the form of micro stuttering.

This is where the frame rate drops right down, for a very brief period of time, before bouncing back up and then repeating this pattern throughout the scene. It's so brief that it's often difficult to pick up, even through careful benchmarking, but it's distinctly noticeable during gameplay.

Micro stuttering is a problem inherent to multi-GPU systems and while there are various tricks that can be employed to reduce its impact, it's not possible to remove it entirely.
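To see why an average frame rate can hide this, here's a small Python sketch that compares two invented sets of frame timestamps (in milliseconds). The numbers and helper names are purely illustrative and aren't taken from any real benchmark.

```python
from statistics import mean


def frame_gaps(timestamps_ms):
    """Time between consecutive frames appearing on screen."""
    return [b - a for a, b in zip(timestamps_ms, timestamps_ms[1:])]


def average_fps(timestamps_ms):
    """Frame rate implied by the mean gap between frames."""
    return 1000.0 / mean(frame_gaps(timestamps_ms))


def worst_gap_ms(timestamps_ms):
    """Longest wait for a new frame: a rough stand-in for visible stutter."""
    return max(frame_gaps(timestamps_ms))


if __name__ == "__main__":
    evenly_paced = [0, 20, 40, 60, 80]   # hypothetical single GPU
    unevenly_paced = [0, 5, 40, 45, 80]  # hypothetical dual GPU with poor pacing
    for name, stamps in (("even", evenly_paced), ("uneven", unevenly_paced)):
        print(name, average_fps(stamps), "fps, worst gap", worst_gap_ms(stamps), "ms")
```

Both sequences average 50 fps, yet the uneven one makes the player wait 35 ms for every other frame, which feels closer to 29 fps in motion. That's why frame time measurements, rather than a simple frame rate counter, are needed to catch micro stuttering.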

In 2015, Microsoft released Direct3D 12, a graphics API used to simplify the programming of game engines. One of the new features offered was improved support for multiple GPUs, and while it could potentially remove a lot of the issues, it needs to be fully implemented by the developers.

Given how complex a modern title is, the extra task of adjusting the engine to better utilize a dual GPU card is unlikely to be taken up by many teams – the user base for such products is going to be very small.

And it's not just the games that need supplementary work to make them properly utilize multiple GPUs. Roughly 8 years ago, AMD and Nvidia started to introduce CrossFire/SLI profiles to their drivers. Initially these were nothing more than hardware configurations that were activated upon detecting a specific title, but over the years, they began to expand their purpose – for example, certain shaders might be replaced before being compiled by the driver, in order to minimize problems.

Reading the release notes from any set of modern drivers will clearly show the addition of new multi-GPU profiles and remaining bugs associated with such systems.

Fewer Is More

But the real killer of multi-GPU cards isn't the software requirements nor the micro stuttering: it's the rapid development of single chip models that's stolen their thunder.

The 2014 Titan Z boasted peak theoretical figures of 5 TFLOPS of FP32 calculation rate and 336 GB/s of memory bandwidth, to name just two. Just 4 years later, Nvidia released the GeForce RTX 2080 Ti, which boasted values of 13.45 TFLOPS and 616 GB/s for the same metrics – on a single chip, and for less than half the cost of the Titan Z.
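For a sense of scale, the figures quoted above can be turned into simple ratios. This is just arithmetic on the numbers in the text; the `speedup` helper is our own name for it.

```python
def speedup(new: float, old: float) -> float:
    """How many times larger the new figure is than the old one."""
    return new / old


if __name__ == "__main__":
    # Peak theoretical figures as quoted in the article.
    print(f"FP32 throughput: {speedup(13.45, 5.0):.2f}x")
    print(f"Memory bandwidth: {speedup(616.0, 336.0):.2f}x")
```

That works out to roughly 2.7 times the FP32 rate and 1.8 times the memory bandwidth.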

It's the same story with AMD's products. The Radeon RX 5700 XT launched in 2019, four years after the appearance of the R9 390 X2, and for just $400, you got 91% more FP32 throughput and 30% more bandwidth.

So are dual or quad GPU cards gone for good? Probably, yes. Even in the professional workstation and compute markets, there's little call for them, as it's far simpler to replace a single faulty GPU in a cluster, than having to lose several chips at the same time.

For a time, they served a niche market very well, but the excessive power demands and jaw-dropping prices are something that nobody wants to see again.

Farewell and thanks for all the frames.
