The story of adaptive sync starts with a problem. For years, displays were only capable of refreshing at a constant rate, usually 60 times per second (60 Hz), to keep the internal hardware simple and effective. However, on the GPU side, games rarely render at a constant rate: due to the complexity of most animated 3D scenes, render rates often fluctuate wildly, hitting 60 FPS at one moment, 46 FPS at the next, 51 after that, and so forth.

There were two ways to deal with this mismatch. The first was to send whatever the GPU had just rendered to the display immediately, even if the display was partway through a refresh. That caused tearing: a new frame would often arrive mid-refresh, so the screen showed the top of one frame and the bottom of the next, split along a visible seam. Although content on the display was recent and input latency was low, tearing was unsightly and often ruined the on-screen picture and overall experience.
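To make the tearing mechanism concrete, here's a simplified Python sketch that works out where the tear line lands when a buffer swap happens mid-refresh. The 60 Hz refresh, the 1080 visible lines and the instant-swap behaviour are assumptions for illustration, and the blanking interval is ignored; `tear_line` is a hypothetical helper, not part of any driver API.

```python
# Minimal sketch: where does a tear appear when v-sync is off?
# Assumptions (hypothetical, for illustration): a 60 Hz display that scans
# 1080 lines top to bottom across the whole refresh period (blanking ignored),
# and a GPU that swaps buffers the instant a frame finishes.

REFRESH_HZ = 60
VISIBLE_LINES = 1080
REFRESH_MS = 1000 / REFRESH_HZ


def tear_line(swap_time_ms: float) -> int:
    """Return the scanline at which the new frame starts replacing the old one.

    Lines above this value still show the previous frame; lines from this
    value down show the new frame. A result of 0 means the swap landed at the
    start of a refresh, so no tear is visible.
    """
    phase = (swap_time_ms % REFRESH_MS) / REFRESH_MS  # fraction of scanout done
    return int(phase * VISIBLE_LINES)


if __name__ == "__main__":
    # Frames finishing at an uneven pace (roughly 48-50 FPS) land at different
    # points in the scanout, so the tear jumps around the screen.
    for t in (0.0, 21.0, 41.0, 62.0):
        print(f"swap at {t:5.1f} ms -> tear at line {tear_line(t)}")
```

Because the render rate and refresh rate aren't locked together, the swap lands at a different point in each scanout, which is why the seam wanders up and down the screen rather than staying put.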

To fix tearing, v-sync (vertical synchronization) was invented. Rather than outputting whatever the GPU had rendered the moment it was ready, v-sync only swapped in a new frame between refreshes, repeating the previous whole frame in instances where the GPU hadn't finished the next one in time. This prevented tearing, as the frames displayed were always complete, but could introduce stutter and input lag whenever a frame needed to be repeated. V-sync also took a slight toll on performance, which understandably isn't ideal.
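The frame-repeating behaviour can be modelled in a few lines of Python. This is a simplified sketch under my own assumptions (a 60 Hz display, frame completion times known up front, and the newest finished frame always shown at each refresh); `vsync_schedule`, `repeated_refreshes` and `vsync_wait_ms` are hypothetical names, and real drivers and buffering schemes differ in detail.

```python
import math

REFRESH_MS = 1000 / 60  # assumed 60 Hz display


def vsync_schedule(finish_times_ms, n_refreshes):
    """Index of the frame on screen at each refresh (None before the first frame).

    Simplified v-sync model: a new frame can only appear at a refresh
    boundary, and the newest frame finished by that boundary is shown.
    If nothing new has finished, the previous frame is shown again.
    """
    shown = []
    current = None
    next_frame = 0
    for k in range(n_refreshes):
        tick = k * REFRESH_MS
        # Pick up every frame that finished by this refresh; keep the newest.
        while next_frame < len(finish_times_ms) and finish_times_ms[next_frame] <= tick:
            current = next_frame
            next_frame += 1
        shown.append(current)
    return shown


def repeated_refreshes(shown):
    """Count refreshes that re-showed the same frame (the source of stutter)."""
    return sum(1 for prev, cur in zip(shown, shown[1:]) if cur is not None and cur == prev)


def vsync_wait_ms(finish_ms):
    """Extra delay a finished frame spends waiting for the next refresh boundary."""
    return math.ceil(finish_ms / REFRESH_MS) * REFRESH_MS - finish_ms


if __name__ == "__main__":
    finishes = [15.0, 40.0, 52.0]
    shown = vsync_schedule(finishes, 5)
    print(shown, repeated_refreshes(shown), [round(vsync_wait_ms(t), 1) for t in finishes])
```

For frames finishing at 15, 40 and 52 ms, the second frame misses the 33.3 ms refresh, so the first frame is shown twice (a stutter), and the second frame then waits 10 ms for the 50 ms refresh (extra input lag).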

For gamers, there was always a tricky decision over whether v-sync should be enabled or disabled. Unless you were after the fastest input response, v-sync was typically recommended when your GPU was rendering frames faster than the display's refresh rate, as it would cap the render rate to match the refresh rate. Keeping it off reduced (though didn't eliminate) stuttering at render rates below the display's refresh rate, and with no performance hit, it was the better choice in those situations unless tearing was particularly bad.

However, as you might have noticed, every v-sync option has drawbacks, especially when render rates fall below the display's refresh rate (e.g. 40 FPS on a 60 Hz display). With the recent explosion of 4K displays on the market, and GPUs lacking the power to drive them, gamers often had to choose between tearing and stuttering in games that rarely reached the ideal 60 FPS mark.
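The 40 FPS on 60 Hz case is worth working through, because it shows where the stutter comes from. Assuming a perfectly steady 40 FPS with v-sync enabled:

$$
t_{\text{frame}} = \frac{1000\ \text{ms}}{40} = 25\ \text{ms}, \qquad
t_{\text{refresh}} = \frac{1000\ \text{ms}}{60} \approx 16.7\ \text{ms}, \qquad
\frac{t_{\text{frame}}}{t_{\text{refresh}}} = 1.5
$$

A frame can only stay on screen for a whole number of refreshes, so 1.5 refreshes per frame on average means frames alternate between one refresh and two:

$$
\frac{1 \times 16.7\ \text{ms} + 2 \times 16.7\ \text{ms}}{2} = \frac{16.7\ \text{ms} + 33.3\ \text{ms}}{2} = 25\ \text{ms}
$$

The average matches the render rate, but frames are presented at an uneven 16.7/33.3 ms cadence, which is what reads as stutter. A display that could refresh at 40 Hz would instead hold every frame for the same 25 ms.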

This was the case until Nvidia announced G-Sync. Using a special piece of proprietary hardware inside the display, G-Sync lets the panel's refresh rate adaptively match the GPU's render rate. This means there is no tearing or stuttering, as the display simply refreshes itself whenever a new frame is ready to be displayed. In my brief time with G-Sync, I found the effect magical: 40 FPS gameplay appears just as smooth as 60 FPS gameplay, free of most of the stutter and jank that make sub-60 FPS games seem choppy.
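Here's an idealised Python model of what an adaptive sync display does inside its refresh window: it starts a refresh as soon as a frame is ready, only waiting if the previous refresh was less than one maximum-rate interval ago. The 144 Hz ceiling and the function names are my own assumptions for illustration, and render rates below the monitor's minimum refresh rate aren't handled in this sketch.

```python
def vrr_refreshes(finish_times_ms, max_hz=144.0):
    """Refresh start times on an idealised adaptive sync display.

    Sketch assumptions: frames arrive in order, the render rate never drops
    below the monitor's minimum refresh rate, and the panel refreshes the
    moment a frame is ready unless that would exceed max_hz.
    """
    min_interval_ms = 1000.0 / max_hz
    refreshes = []
    for t in finish_times_ms:
        if refreshes and t < refreshes[-1] + min_interval_ms:
            # Too soon after the last refresh: wait until the panel can refresh again.
            t = refreshes[-1] + min_interval_ms
        refreshes.append(t)
    return refreshes


def hold_times(refresh_times_ms):
    """How long each frame stays on screen before the next refresh replaces it."""
    return [b - a for a, b in zip(refresh_times_ms, refresh_times_ms[1:])]


if __name__ == "__main__":
    steady_40fps = [25.0 * i for i in range(1, 6)]  # a frame every 25 ms
    print(hold_times(vrr_refreshes(steady_40fps)))
```

Feeding in a steady 40 FPS gives a hold time of exactly 25 ms for every frame, rather than the uneven mix of one- and two-refresh holds a fixed 60 Hz display produces with v-sync. That even cadence is why 40 FPS can look as smooth as 60 FPS on an adaptive sync monitor.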

There are several issues with G-Sync, though, that make it less than ideal for a portion of the PC gaming population. For starters, the proprietary hardware module it requires restricts its use to Nvidia graphics cards. Because Nvidia sells the modules to display manufacturers, implementing G-Sync in a display is expensive, often pushing the price of compatible monitors up by $200-300. The module also reduces flexibility, as monitors can't apply any post-processing or accept other inputs.

FreeSync is AMD's alternative to G-Sync, which the company hopes will deliver a better adaptive sync experience in the long run. While G-Sync has been available on the market for over a year, FreeSync hits the market today as a cheaper alternative with all the same functionality and more. Sounds promising, doesn't it?

Unlike G-Sync, FreeSync doesn't require a dedicated, proprietary chip to function. Instead, FreeSync uses (and essentially is the basis of) the VESA DisplayPort 1.2a Adaptive Sync standard. This means that display manufacturers are free to use whatever scaler hardware they like to implement FreeSync, so long as it supports the standard. With multiple scaler manufacturers producing FreeSync-compatible chips, the resulting competition drives prices down, which benefits the consumer.

To use FreeSync, you'll need a compatible monitor connected to a compatible GPU via DisplayPort, the same requirement as G-Sync. On AMD's side, the only supported GPUs at this stage are GCN 1.1 or newer parts, in other words the Radeon R9 295X2, R9 290X, R9 290, R9 285, R7 260X and R7 260. Also supported are AMD's APUs with integrated GCN 1.1 graphics, which means the A6-7400K and above. While the list of discrete GPUs that support FreeSync is currently shorter than Nvidia's list for G-Sync, it's nice to see AMD hasn't left out its APU users.

FreeSync supports variable refresh rates between 9 and 240 Hz, although it's up to manufacturers to produce hardware that supports a range this large. At launch, the monitors with the widest range support 40 to 144 Hz. Meanwhile, G-Sync currently supports 30 to 144 Hz, with all G-Sync monitors reaching at least the 30 Hz lower bound. This gives G-Sync monitors a specification advantage at this stage, though I'd expect FreeSync monitors with wider refresh ranges to hit the market once issues with flickering below 40 Hz are resolved.

FreeSync also allows display manufacturers to implement extra features that G-Sync does not. Monitors can have multiple inputs, including HDMI and DVI, which is great for flexibility even if adaptive sync is only supported through DisplayPort. Displays can also include color processing features, audio processors, and full-blown on-screen menus. This is basically everything you'd expect from a modern monitor, with FreeSync as the icing on the cake.

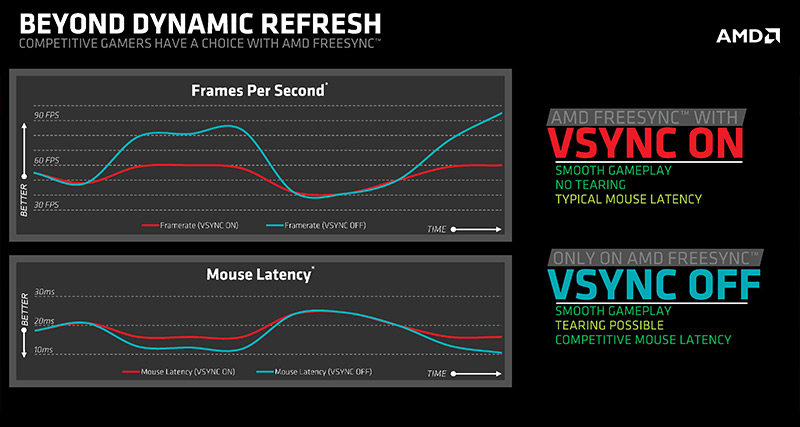

There are also some differences in the way FreeSync is implemented on the software side. When GPU render rates slide outside the monitor's refresh rate range, G-Sync will always revert to v-sync to lock the render rate to the refresh rate. With FreeSync, you can choose whether to use v-sync, giving users the option of lower input lag at the expense of tearing.

As for situations where the GPU render rate goes above a monitor's maximum refresh rate, or dips under its minimum, there has been some confusion as to how G-Sync and FreeSync handle these cases. To clear up the differences, I'll start with Nvidia's implementation. Whenever frame rates slide outside the variable refresh window of a monitor, G-Sync reverts to v-sync. As an example, on a 30-144 Hz monitor, attempting to exceed 144 FPS caps the render rate at 144 FPS to match the 144 Hz refresh rate. Going below 30 FPS holds the refresh rate at 30 Hz, with frames repeating as we've come to expect from v-sync.

AMD's implementation differs slightly in that you can choose to have v-sync enabled or disabled for situations where the render rate goes above or below the variable refresh window. Having it enabled delivers the same experience as G-Sync: a capped render rate when attempting to exceed the maximum display refresh, and a refresh rate held at the minimum when dipping under. This means that when running a game at 26 FPS on a monitor with a 40 Hz minimum, some frames will repeat to maintain a 40 Hz refresh.

If you choose to go with v-sync disabled, it will act exactly as you expect. You'll get tearing at render rates above and below the variable refresh window, with the display refreshing at either the maximum or minimum rate depending on whether you've gone over or under the limit.
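The rules for both technologies boil down to a small decision table. The Python sketch below encodes the behaviour as described above, not any vendor's actual driver logic; `window_behaviour` and the `Behaviour` fields are hypothetical names. G-Sync corresponds to always passing `vsync=True`, while FreeSync lets the user pick either value.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Behaviour:
    refresh_hz: float      # rate the panel actually refreshes at
    delivered_fps: float   # rate the GPU ends up rendering at
    tearing: bool          # True if frames can be swapped in mid-refresh
    repeats_frames: bool   # True if whole frames are re-shown to fill refreshes


def window_behaviour(render_fps: float, min_hz: float, max_hz: float, vsync: bool) -> Behaviour:
    """Classify what happens at a given render rate on an adaptive sync monitor.

    Hypothetical model of the behaviour described in this review:
    G-Sync always behaves as vsync=True; FreeSync lets the user choose.
    """
    if min_hz <= render_fps <= max_hz:
        # Inside the window: the panel simply follows the GPU, one refresh per frame.
        return Behaviour(render_fps, render_fps, tearing=False, repeats_frames=False)
    if render_fps > max_hz:
        if vsync:
            # V-sync caps the render rate at the panel's maximum refresh rate.
            return Behaviour(max_hz, max_hz, tearing=False, repeats_frames=False)
        # No cap: the GPU keeps going and frames tear against the maximum refresh.
        return Behaviour(max_hz, render_fps, tearing=True, repeats_frames=False)
    # Below the window: the panel can't refresh any slower than min_hz.
    if vsync:
        # Whole frames are repeated to keep the panel at its minimum refresh rate.
        return Behaviour(min_hz, render_fps, tearing=False, repeats_frames=True)
    # Frames are swapped in as soon as they finish, tearing against the minimum refresh.
    return Behaviour(min_hz, render_fps, tearing=True, repeats_frames=False)


if __name__ == "__main__":
    for fps in (26, 90, 160):
        for vsync in (True, False):
            print(fps, vsync, window_behaviour(fps, 40, 144, vsync))
```

For the 26 FPS example on a monitor with a 40 Hz minimum, `window_behaviour(26, 40, 144, vsync=True)` reports a 40 Hz refresh with repeated frames and no tearing. On average each frame is held for 40 / 26 ≈ 1.54 refreshes, so some frames appear once and others twice.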

For testing, AMD sent me LG's latest 34UM67, which is a 34-inch, 21:9 monitor with a resolution of 2560 x 1080. Before I give my impressions on FreeSync, let's take a look at the monitor.