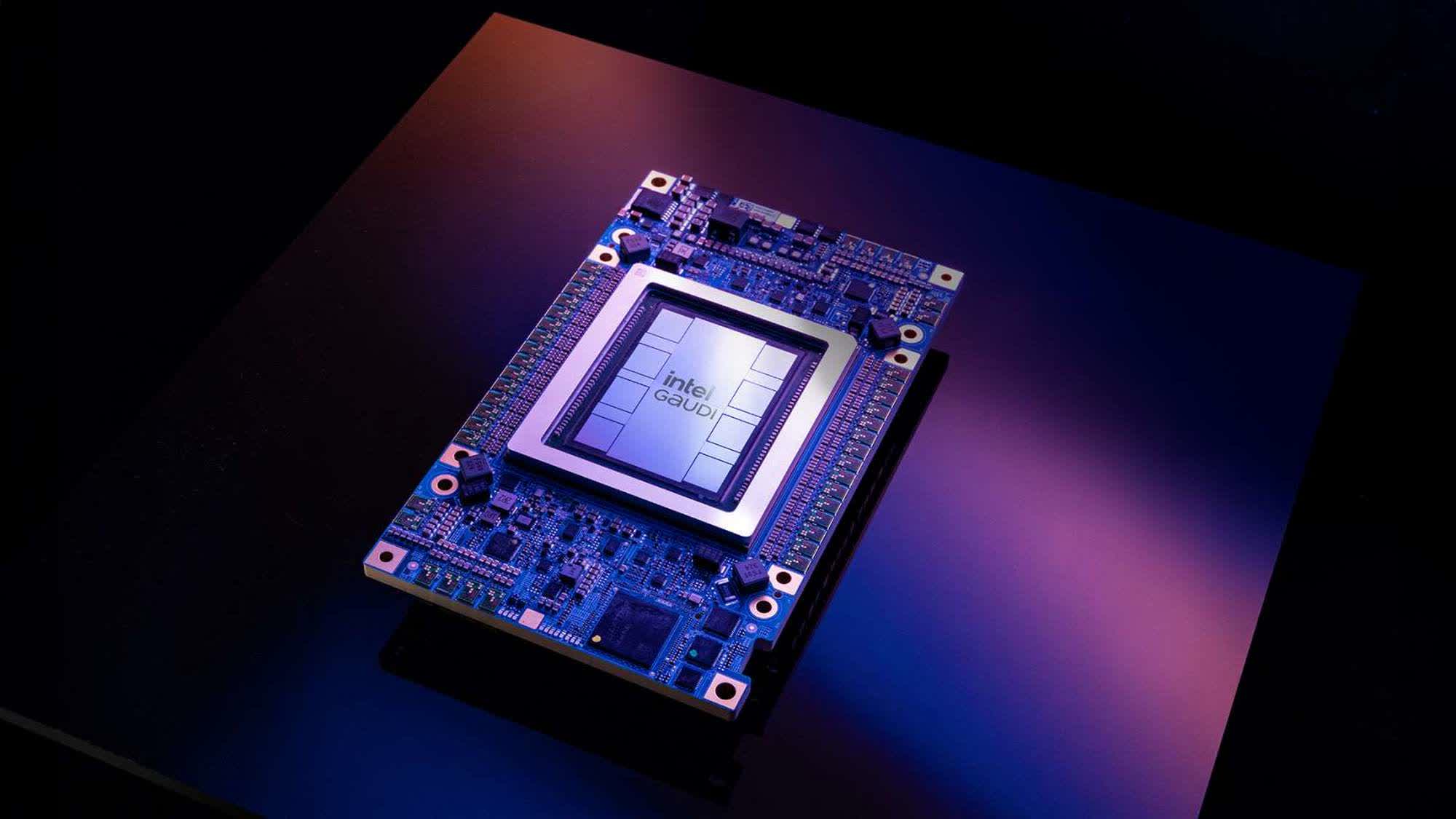

What just happened? At its Vision 2024 conference in Phoenix, Arizona, Intel unveiled the Gaudi 3 AI accelerator, which the company claims will offer 50 percent better inference performance than Nvidia's H100. The Gaudi 3 was teased at Intel's AI Everywhere event last December, where CEO Pat Gelsinger said it would be available soon to take on Nvidia's data center GPUs and AMD's competing MI300 accelerator.

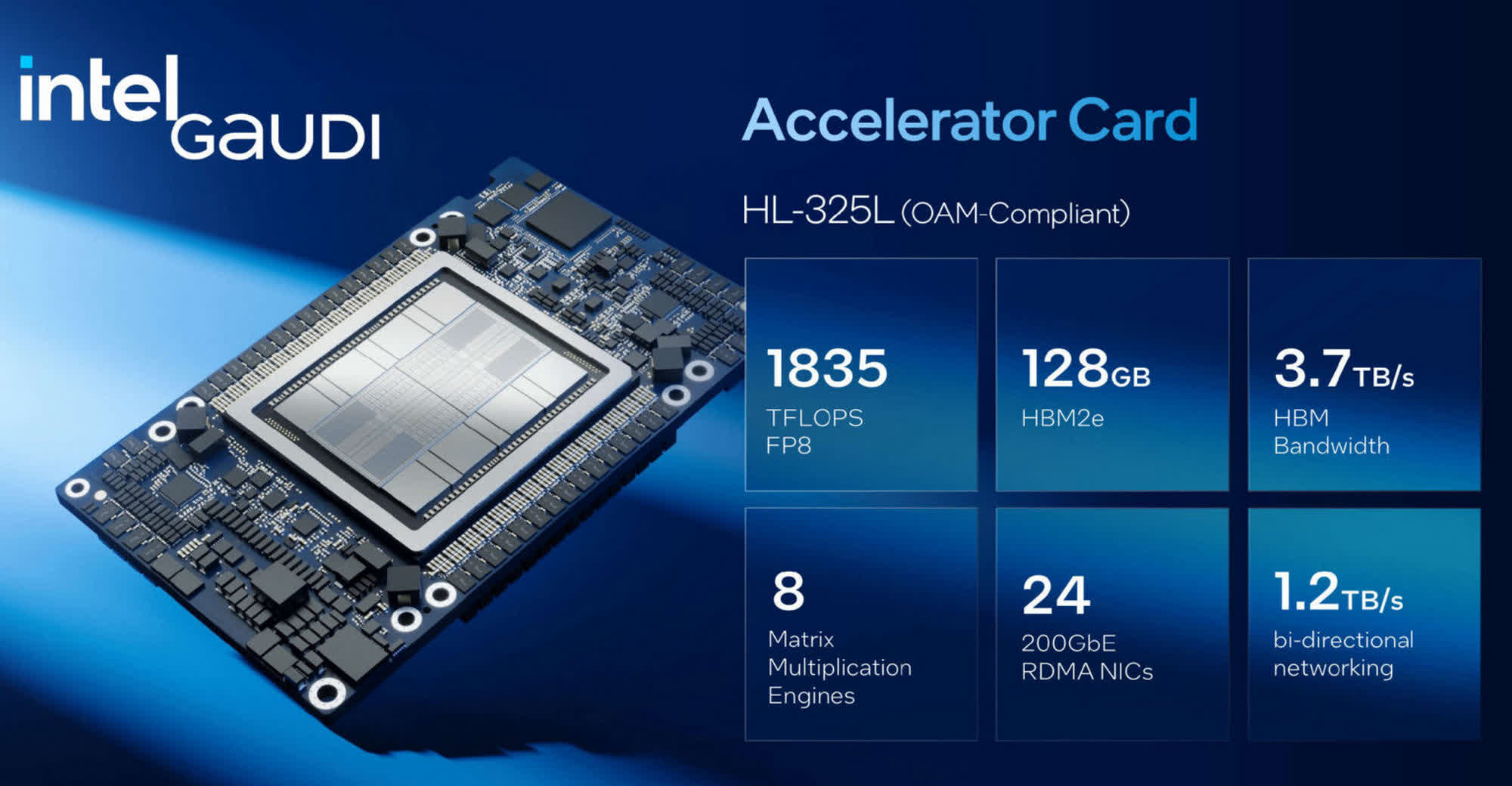

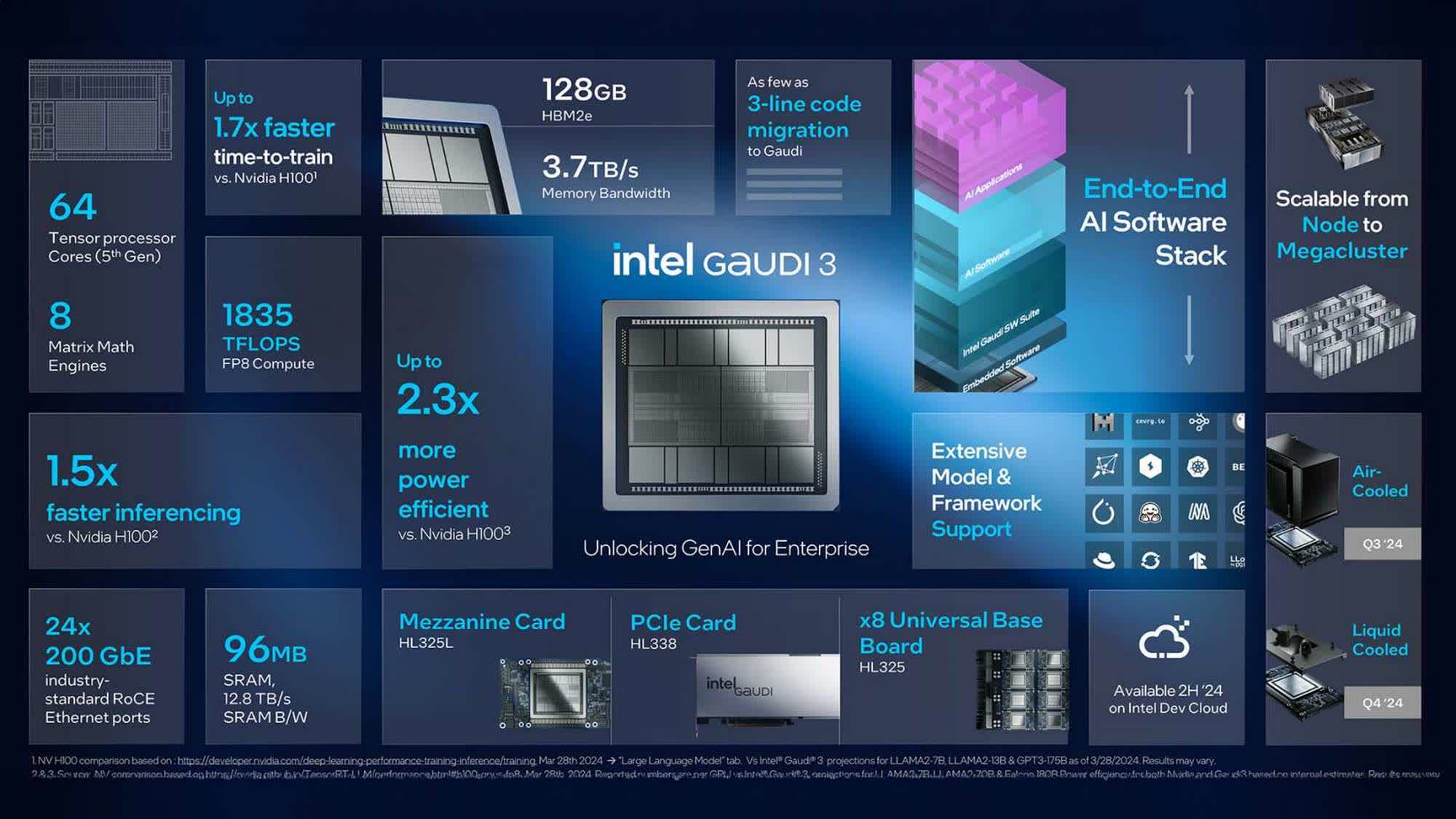

The new chip, which succeeds the Gaudi 2, combines two 5nm TSMC dies and features 64 5th-gen Tensor cores and 128GB of HBM2e memory running at 3.7Gbps, for 3.7TB/s of memory bandwidth.

The numbers suggest substantial improvements over Gaudi 2, which came with 24 Tensor cores, 96GB of HBM2e memory at 3.27Gbps, and 2.45TB/s of bandwidth. The Gaudi 3 also includes 96MB of SRAM with 12.8TB/s bandwidth.

Other key specs include 128GB of VRAM, 1,835 TFLOPS in both FP8 and BF16 matrix operations, and 900 watts of power consumption with air cooling. In comparison, Gaudi 2 has 96GB of VRAM, offers 835 TFLOPS in FP8 matrix and 432 TFLOPS in BF16 matrix operations, and consumes 600W of power.

Additionally, every Gaudi 3 accelerator includes twenty-four 200Gb Ethernet ports to provide flexible, open-standard networking. It will also be offered as a PCIe add-in card, a form factor the company says is suited to workloads such as fine-tuning, inference, and retrieval-augmented generation (RAG).

According to Intel, the third-generation Gaudi chip will deliver 4x the AI compute for BF16, 1.5x the memory bandwidth, and 2x the networking bandwidth of its predecessor, thereby offering "significant performance improvements" for training and inference tasks on leading GenAI models.
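As a rough sanity check, the generational ratios can be recomputed from the spec figures quoted in this article. The snippet below is only a sketch: the dictionary and function names are illustrative, the numbers are the ones cited above rather than independently verified values, and networking is left out because the article does not give a Gaudi 2 figure to compare against.

```python
# Spec figures as quoted in this article (not independently verified).
GAUDI2 = {
    "bf16_tflops": 432,
    "hbm_bandwidth_tb_per_s": 2.45,
    "hbm_capacity_gb": 96,
    "power_w": 600,
}
GAUDI3 = {
    "bf16_tflops": 1835,
    "hbm_bandwidth_tb_per_s": 3.7,
    "hbm_capacity_gb": 128,
    "power_w": 900,
}


def generational_ratios(old, new):
    """Return new/old for every spec present in the old generation's table."""
    return {name: new[name] / old[name] for name in old}


ratios = generational_ratios(GAUDI2, GAUDI3)
for name, ratio in ratios.items():
    print(f"{name}: {ratio:.2f}x")
```

On these figures, the BF16 ratio comes out slightly above the quoted 4x and the memory bandwidth ratio lands at about 1.5x, so Intel's headline multipliers are consistent with the raw specs. Power draw rises by 1.5x as well.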

Comparing the Gaudi 3 with the Nvidia H100, Intel claims that its latest AI accelerator is 50 percent faster on average at training Llama 2 models with 7B and 13B parameters, as well as the 175B-parameter GPT-3 model. It is also said to offer 50 percent faster inference and 40 percent greater inference power efficiency across Llama models with 7B and 70B parameters and the 180B-parameter Falcon model.

Intel adds that Gaudi 3 will offer 30 percent faster inference than the Nvidia H200 on the same Llama 7B and 70B and Falcon 180B models. Benchmarks provided by Team Blue also suggest that Gaudi 3 will be between 40 and 70 percent faster for AI training than the H100, depending on the LLM, but how it performs in real-world deployments remains to be seen.

The first samples of Gaudi 3 are already being provided to Intel's partners, with volume production availability scheduled for the second half of this year. According to Intel's roadmap, air-cooled variants of the Gaudi 3 will start shipping in Q3 2024, while the liquid-cooled models will only start going out in Q4.